While everyone is moving to the cloud and modern software solutions, many legacy systems still rely on traditional integration approaches such as uploading and downloading data via FTP (File Transfer Protocol) or SFTP (SSH File Transfer Protocol). AWS recently announced a managed service for SFTP transfers, which can serve as a bridge between the cloud and legacy applications.

Standard Approaches

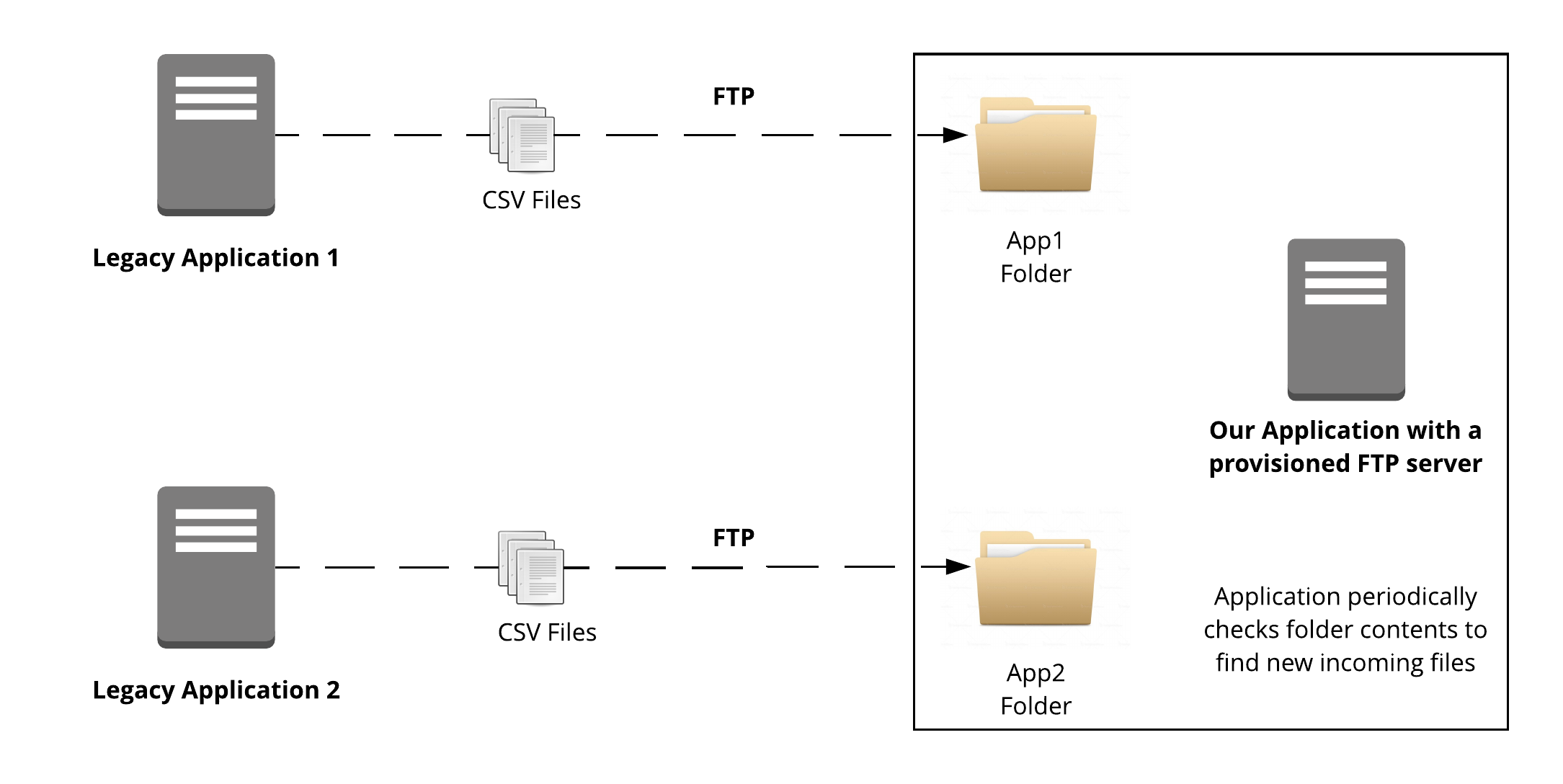

Usually, FTP integration works like this:

Remote applications “Legacy Application 1” and “Legacy Application 2” need to transfer data to our application. Each of them connects to an FTP server deployed on the same machine as our application. Each legacy application has a dedicated folder that matches the app name (“App1 Folder” and “App2 Folder”).

While such an architecture is viable, it has many flaws, especially when reliable integration is a crucial requirement.

An FTP server has to be installed, properly configured, and launched on the same server/VM as the application. There are a number of decent open-source solutions available, like FileZilla, vsftpd, and others. Each solution requires very careful configuration to achieve good performance and maintain a proper level of data security. A deeper understanding of operating system internals is needed to achieve things like encryption in transit and encryption at rest.

Furthermore, running your own FTP server requires constant monitoring and timely installation of software updates, since a vulnerability in the component responsible for data transfer and storage can become a disaster.

Maintaining software as simple as an FTP server becomes a real problem once we start thinking more about security and performance.

Things get even trickier when we need to deal with increased load. Do we need to scale both the application and the FTP server at the same time? How do we synchronize data across different FTP nodes? Should we use a load balancer?

A very similar setup is used for SFTP integration.

Such a setup has the same concerns plus additional security-related challenges, for example, configuring the server so that each user is confined to their home folder over SFTP.

Managed SFTP using AWS

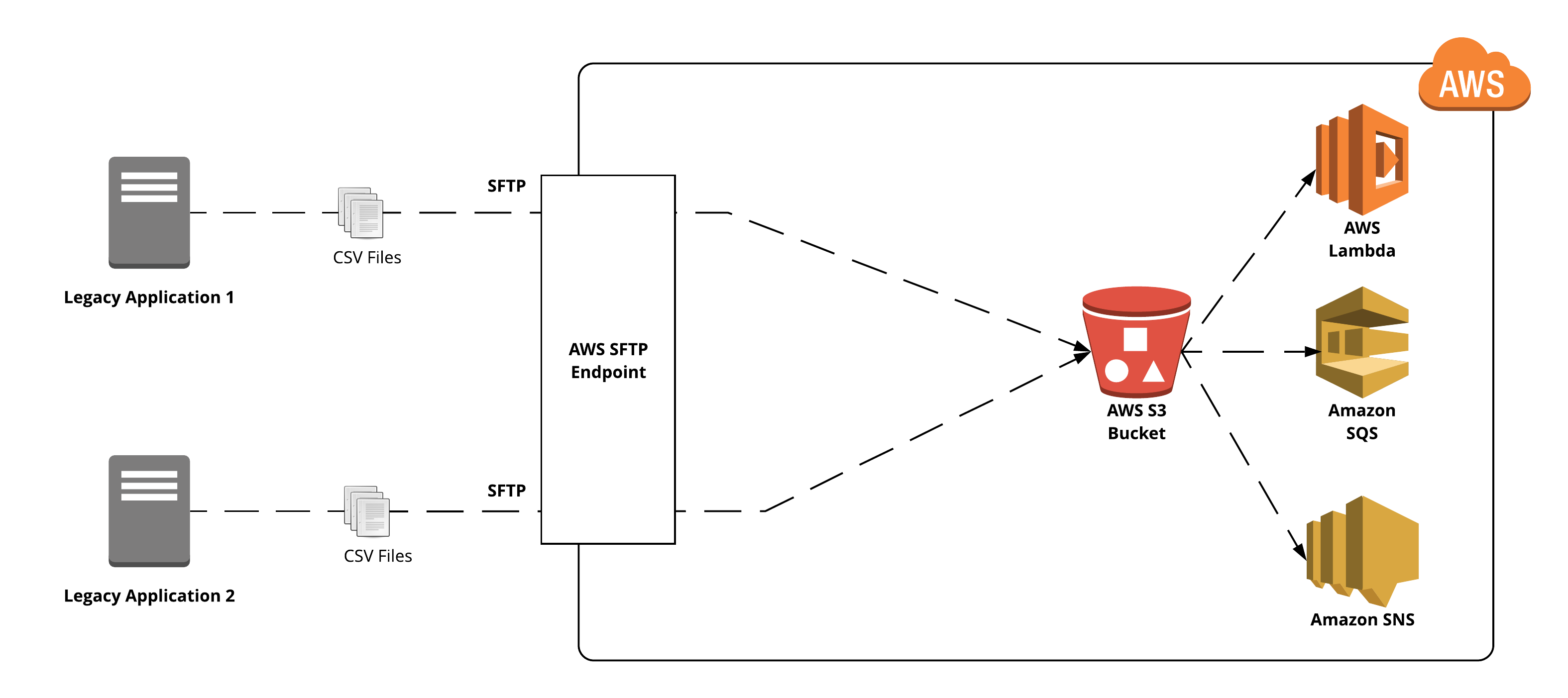

By contrast, the managed SFTP service provided by AWS is the complete opposite of the traditional approach: no servers need to be provisioned, the configuration is simple, and the solution scales out of the box. No polling is required either, since files uploaded via SFTP are delivered directly to an S3 bucket, and notifications can be configured for new files. A basic setup looks like this:

As with the self-hosted FTP/SFTP server approach, remote applications “Legacy Application 1” and “Legacy Application 2” still use the secure SFTP protocol to deliver data to our system. But now delivery happens without provisioning any servers: transferred data arrives automatically in the S3 bucket. With the help of S3 Notifications, it’s then possible to send a push notification or add a message to an AWS SQS queue (allowing our application to process data in a scalable way). It’s even possible to process the file using AWS Lambda with no servers involved at all.
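To make the Lambda option more concrete, here is a minimal sketch of a handler that reacts to S3 event notifications for new uploads. It assumes the bucket is configured to send object-created events to the function; the function names and the bucket and key values used in any test event are hypothetical, and real file processing would replace the `print` call.

```python
import urllib.parse


def extract_uploaded_objects(event):
    """Return (bucket, key) pairs for object-created records in an S3 event."""
    uploaded = []
    for record in event.get("Records", []):
        # Skip anything that is not an upload (for example, deletions).
        if not record.get("eventName", "").startswith("ObjectCreated"):
            continue
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        uploaded.append((bucket, key))
    return uploaded


def handler(event, context):
    """Lambda entry point (hypothetical): log each newly uploaded file."""
    objects = extract_uploaded_objects(event)
    for bucket, key in objects:
        # Placeholder for real processing of the uploaded file.
        print(f"New SFTP upload: s3://{bucket}/{key}")
    return {"processed": len(objects)}
```

Keeping the parsing in a separate function makes it easy to exercise locally with a hand-written event before wiring it to a real bucket.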

IMPORTANT: AWS Transfer for SFTP supports only the SFTP protocol (SSH File Transfer Protocol). FTP (File Transfer Protocol) is not supported. This means an external system, such as an ERP, must support SFTP connectors.

Typical AWS SFTP Configuration

Let’s think about typical SFTP integration flows and create corresponding configurations in AWS.

Shared SFTP Space

If you need to set up a shared space and control access to it on a per-user basis, the configuration is rather simple.

First, let’s create two IAM roles (an access role and a logging role) and one policy. Each SFTP user can have a separate role and policy, but in this case the access role and the policy can be shared among all users.

The SFTP Access IAM Role should have enough permissions for AWS Transfer for SFTP to access the required resources:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AllowListingOfUserFolder",
          "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
          "Effect": "Allow",
          "Resource": ["arn:aws:s3:::"]
        },
        {
          "Sid": "HomeDirObjectAccess",
          "Effect": "Allow",
          "Action": [
            "s3:PutObject",
            "s3:GetObject",
            "s3:DeleteObjectVersion",
            "s3:DeleteObject",
            "s3:GetObjectVersion"
          ],
          "Resource": "arn:aws:s3:: /*"
        }
      ]
    }

AWS Transfer will be accessing S3, so the following trust relationship is required:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "",
          "Effect": "Allow",
          "Principal": { "Service": "transfer.amazonaws.com" },
          "Action": "sts:AssumeRole"
        }
      ]
    }

The SFTP IAM Policy for the user should look like this (because all users share the same space, it matches the SFTP Access IAM Role policy):

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AllowListingOfUserFolder",
          "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
          "Effect": "Allow",
          "Resource": ["arn:aws:s3:::"]
        },
        {
          "Sid": "HomeDirObjectAccess",
          "Effect": "Allow",
          "Action": [
            "s3:PutObject",
            "s3:GetObject",
            "s3:DeleteObjectVersion",
            "s3:DeleteObject",
            "s3:GetObjectVersion"
          ],
          "Resource": "arn:aws:s3:: /*"
        }
      ]
    }

For the SFTP Logging IAM Role, access to CloudWatch should be allowed. The policy document looks like this:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "SFTPDemoLoggingPolicyDocument",
          "Effect": "Allow",
          "Action": [
            "logs:CreateLogStream",
            "logs:DescribeLogStreams",
            "logs:CreateLogGroup",
            "logs:PutLogEvents"
          ],
          "Resource": "*"
        }
      ]
    }

The same trust relationship is required:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "",
          "Effect": "Allow",
          "Principal": { "Service": "transfer.amazonaws.com" },
          "Action": "sts:AssumeRole"
        }
      ]
    }
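The two roles can also be created from a script instead of the console. The following is a minimal sketch, assuming a boto3 IAM client is passed in (for example `boto3.client("iam")`); the helper name `create_transfer_role` and the role and policy names are hypothetical, and the permissions are attached as an inline role policy for simplicity. The optional empty `Sid` from the trust relationship is omitted here.

```python
import json

# Trust relationship that lets the AWS Transfer service assume the role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "transfer.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_transfer_role(iam_client, role_name, policy_name, permissions):
    """Create a role AWS Transfer can assume and attach an inline policy.

    iam_client is expected to behave like boto3.client("iam").
    Returns the ARN of the new role.
    """
    role = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(TRUST_POLICY),
    )
    iam_client.put_role_policy(
        RoleName=role_name,
        PolicyName=policy_name,
        PolicyDocument=json.dumps(permissions),
    )
    return role["Role"]["Arn"]
```

Calling it once with the S3 permissions and once with the CloudWatch Logs permissions would yield the access role and the logging role respectively.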

NOTE ON POLICY DOCUMENTS: If the policy document is too long, the following error may be returned while establishing a connection with the SFTP endpoint:

…‘policy’ failed to satisfy constraint: Member must have length less than or equal to 2048 (Service: AWSSecurityTokenService; Status Code: 400…
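One way to avoid hitting this limit unexpectedly is to check the policy size before attaching it to a user. The sketch below assumes the 2048 limit applies to the character length of the serialized policy, as the error message suggests; the helper names are hypothetical. Serializing without insignificant whitespace usually saves a noticeable number of characters compared to a pretty-printed document.

```python
import json

MAX_POLICY_LENGTH = 2048  # limit quoted in the error message


def compact_policy(policy):
    """Serialize a policy dict without insignificant whitespace."""
    return json.dumps(policy, separators=(",", ":"))


def check_policy_length(policy, limit=MAX_POLICY_LENGTH):
    """Return the compact JSON, or raise if it still exceeds the limit."""
    document = compact_policy(policy)
    if len(document) > limit:
        raise ValueError(
            f"policy is {len(document)} characters; limit is {limit}"
        )
    return document
```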

Tenant-Based Access

When a user should only see their own home directory, the policy should be updated accordingly:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AllowListingOfUserFolder",
          "Action": ["s3:ListBucket", "s3:GetBucketLocation"],
          "Effect": "Allow",
          "Resource": ["arn:aws:s3:::${transfer:HomeBucket}"],
          "Condition": {
            "StringLike": {
              "s3:prefix": [
                "/${transfer:UserName}/*",
                "/${transfer:UserName}"
              ]
            }
          }
        },
        {
          "Sid": "HomeDirObjectAccess",
          "Effect": "Allow",
          "Action": [
            "s3:PutObject",
            "s3:GetObject",
            "s3:DeleteObjectVersion",
            "s3:DeleteObject",
            "s3:GetObjectVersion"
          ],
          "Resource": "arn:aws:s3:::${transfer:HomeDirectory}*"
        }
      ]
    }

As you may notice, we use variables provided by the AWS Transfer service to create a scoped-down policy that limits access to objects in a bucket based on the user name and their home folder.
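To get a feel for what such a policy grants to a particular user, it can help to preview it with the variables filled in. The sketch below is only a local approximation using plain string replacement; the actual substitution is performed by the AWS Transfer service when a session starts. The function name, the fragment of the listing statement, and the example user, bucket, and home directory values are all hypothetical.

```python
import json

# A fragment of the listing statement, kept as text so variables stay visible.
POLICY_TEMPLATE = """
{
  "Effect": "Allow",
  "Action": ["s3:ListBucket"],
  "Resource": ["arn:aws:s3:::${transfer:HomeBucket}"],
  "Condition": {"StringLike": {"s3:prefix": ["/${transfer:UserName}"]}}
}
"""


def resolve_policy_variables(policy_text, user_name, home_bucket, home_directory):
    """Substitute AWS Transfer policy variables for a local preview only."""
    replacements = {
        "${transfer:UserName}": user_name,
        "${transfer:HomeBucket}": home_bucket,
        "${transfer:HomeDirectory}": home_directory,
    }
    for variable, value in replacements.items():
        policy_text = policy_text.replace(variable, value)
    return policy_text


preview = json.loads(
    resolve_policy_variables(
        POLICY_TEMPLATE,
        user_name="app1",                      # hypothetical SFTP user
        home_bucket="sftp-demo-bucket",        # hypothetical bucket
        home_directory="/sftp-demo-bucket/app1",
    )
)
```

Comparing previews for two different users is a quick sanity check that neither of them can list the other’s folder.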

IMPORTANT NOTE: Right now, updating the policy in IAM is not automatically reflected in the SFTP user record due to caching. After the IAM policy is updated, every user referencing it should be updated as well.
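Updating users one by one in the console gets tedious quickly, so the refresh can be scripted. The following is a minimal sketch, assuming a boto3 Transfer client is passed in (for example `boto3.client("transfer")`) and that every user on the given server shares the same policy; the helper name `reapply_policy` is hypothetical. It uses the `list_users` and `update_user` operations and follows `NextToken` for pagination.

```python
import json


def reapply_policy(transfer_client, server_id, policy):
    """Push the current policy JSON to every user on one SFTP server.

    transfer_client is expected to behave like boto3.client("transfer").
    Returns the list of user names that were updated.
    """
    policy_text = json.dumps(policy)
    updated = []
    kwargs = {"ServerId": server_id}
    while True:
        page = transfer_client.list_users(**kwargs)
        for user in page["Users"]:
            transfer_client.update_user(
                ServerId=server_id,
                UserName=user["UserName"],
                Policy=policy_text,
            )
            updated.append(user["UserName"])
        # Keep requesting pages until the service stops returning a token.
        token = page.get("NextToken")
        if not token:
            return updated
        kwargs["NextToken"] = token
```

If only some users reference the changed policy, the loop would need an additional filter on the user name or role.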

Final Thoughts

AWS Transfer for SFTP is a great fully managed alternative to the time-consuming configuration of an on-premises SFTP server. It can also be used as a secure and easy-to-support integration endpoint, provided the external system supports the SFTP protocol.

It’s critical to understand that SFTP is not a secure version of FTP but a completely different protocol, so in some cases a simple drop-in replacement won’t be possible.

Also, right now, the fully managed version of the service only allows authentication using SSH keys (username and password authentication is only available with a custom identity provider).
