Hi everyone. In this blog post, I’d like to bring up the topic of using “AI” in SaaS, both for improving the customer experience and for marketing.

Let’s be honest: many companies introduce LLMs and similar technologies because they “have to”. There is a certain pressure from potential customers or investors. It’s as if not having “AI” in your product means you are far behind the technology revolution. Things get even worse if your competitor does have “AI”.

Before we start, let’s clear up one thing. At this moment, “AI” is more a marketing term than a technical one. In fact, we are dealing with different kinds of ML (Machine Learning) models, some of which are really powerful. I’ll be using the term “AI” in this blog post since it’s better for attracting readers (everyone has their own agenda).

💡

The term “Machine Learning” is older than 90% of people reading this blog post, and recent advancements made ML-based products attractive to consumers.

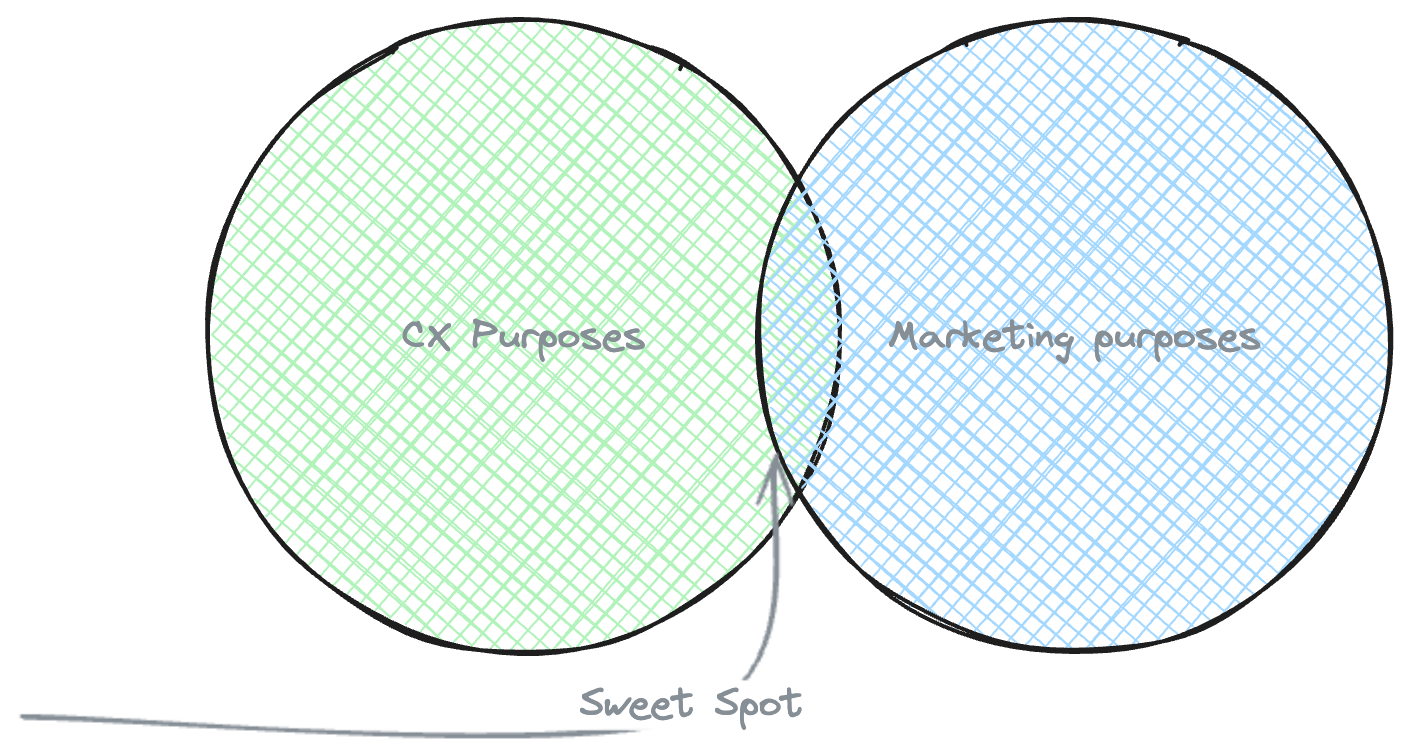

Now that everyone offended has stopped reading, I can continue. So what would be the ideal case for introducing AI into your SaaS right now? We can’t ignore the fact that AI hype generates traction, so it will certainly benefit marketing. On the other hand, using something just to be able to say you have it is also dumb. As always, the sweet spot is somewhere in between.

Ideally, adding AI capabilities should both improve the customer experience and make the marketing team happy. I propose walking through some “standard” areas where AI-based technologies can benefit both customers and the product.

Humans vs Machines

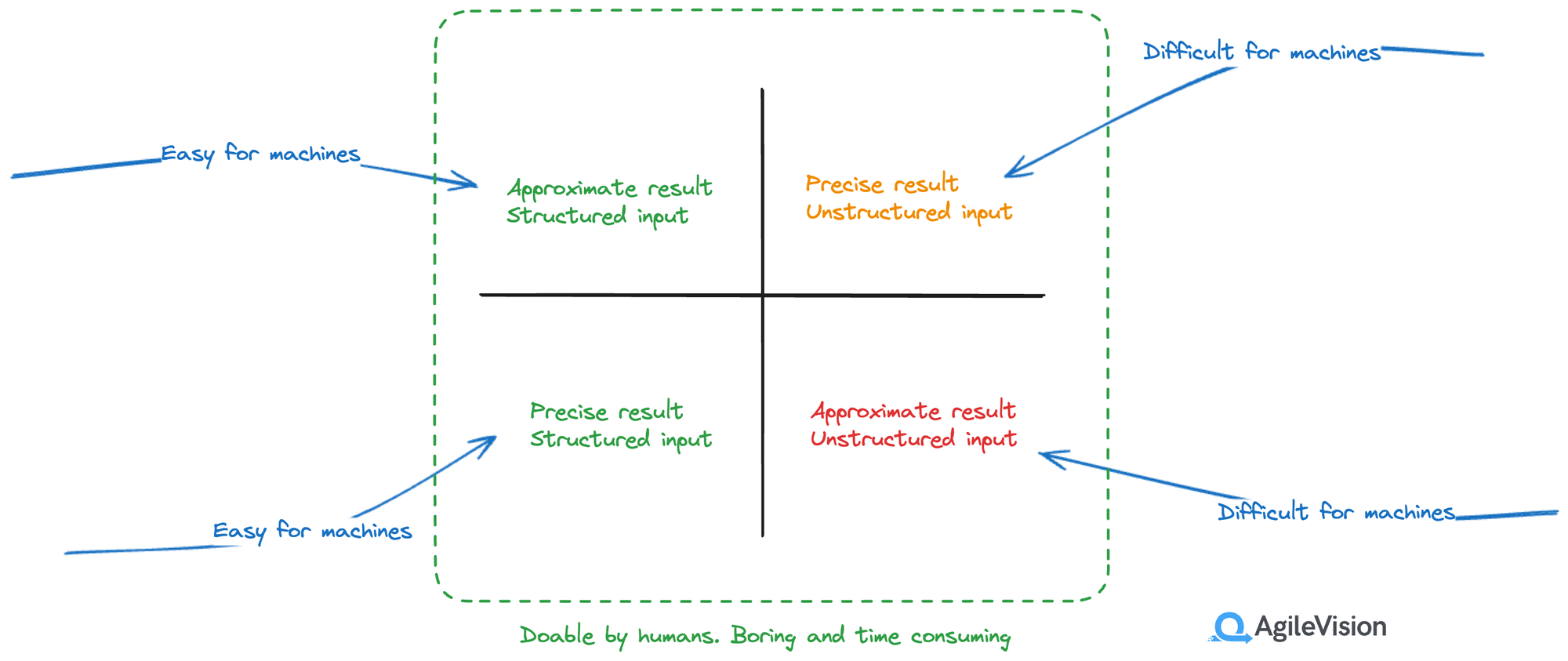

There are certain tasks that are easy for humans and difficult for machines, and vice versa. Which is which depends on the nature of the input data and the expected precision of the output.

Machines excel at producing precise results from structured inputs, while humans can deal with unstructured data. Nothing prevents humans from handling large volumes of structured data, but it’s overwhelming (that’s why we have machines in the first place). The same goes for large volumes of unstructured data, in fact.

AI allows us to transform diverse datasets into a single format, suitable either for further processing by machines or for direct consumption by humans.

What’s important to understand about current AI trends is that the focus is on foundational models. These ML models are trained to support a wide range of use cases across many domains, for example, language models (Google BERT, GPT-*, etc.), image models (DALL-E) and video models (Sora).

Such models are usually trained by their creators on huge datasets and require enormous computational resources. Often, the resources needed to train such a model can’t be afforded by anyone except large corporations like Microsoft, Google, OpenAI or Meta.

The biggest benefit of modern foundational models is the ability to quickly introduce features that previously required a lot of training and fine-tuning of problem-specific ML models.

Use-cases for AI in a B2B SaaS

Now it’s time to proceed with the actual reason this blog post was written. What do we know about B2B SaaS applications?

- Users can be overwhelmed by the number of tasks they are dealing with, and the input data may be incomplete, untidy or missing. One of the B2B SaaS challenges is to avoid business disruption and improve team performance.

- B2B SaaS often model complex business workflows that include repetitive and time-consuming tasks.

- B2B SaaS may require integration with many external systems. Sometimes those are legacy systems that don’t know anything about modern data formats like JSON.

- B2B SaaS often deal with a variety of different objects and data types. Finding a desired item may be difficult and simple pattern matching may not be enough.

- B2B SaaS process large volumes of data, which may be the foundation for business-critical metrics. Interpreting these metrics can be difficult for end users.

Based on these facts, we can think about possible AI applications that improve the overall customer experience of B2B SaaS users.

Input data cleanup and normalization

Business workflows are complex. The input required by an ERP, WMS or OMS can be daunting. Humans get tired and bored, and thus clumsy. In other cases, fields like descriptions may be filled in by many workers with different literacy levels or language skills.

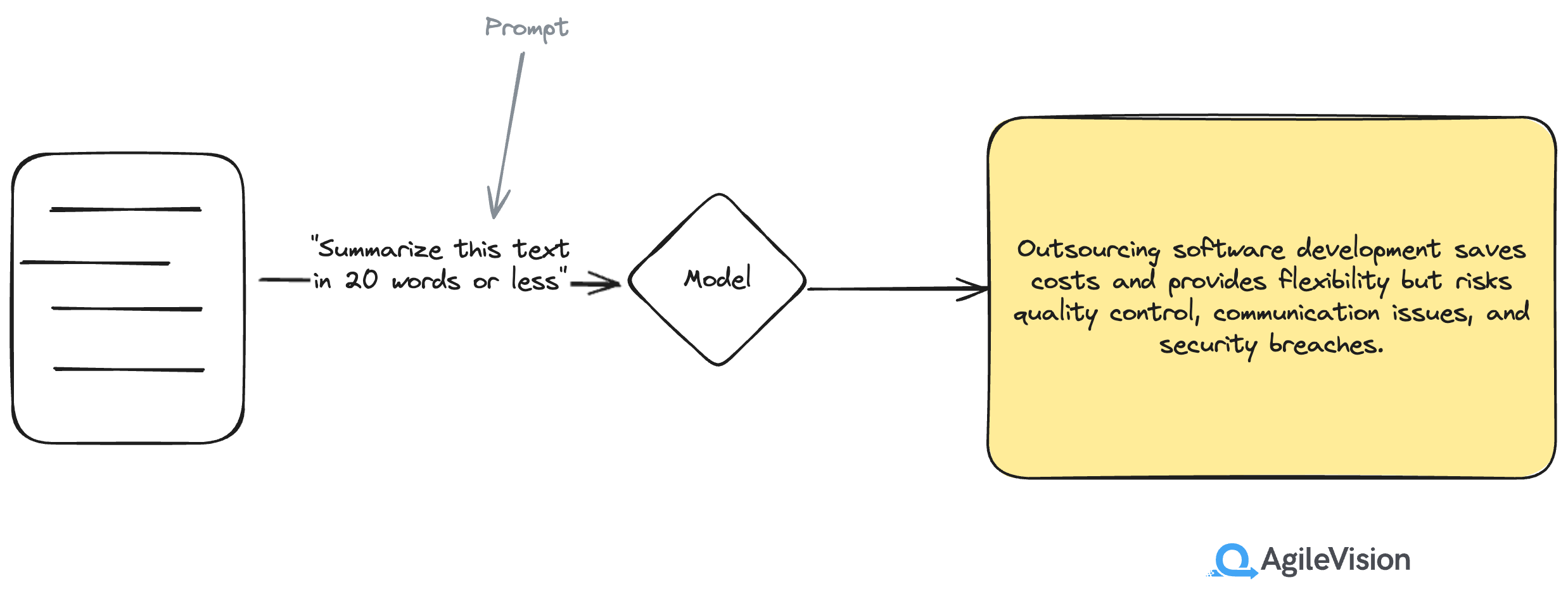

The catch is that customers may be upset with data from a supplier because it’s inaccurate, untidy, contains grammar errors or is simply too detailed. LLMs are very good at transforming one text into another, so why not use them for a better customer experience? Let’s assume we need to review a large number of documents. We can skim through each document and write a short summary so we can easily remember what it is about. Or we can have an LLM write the summaries.

🎯

Customer experience value: 4/5 Marketing value: 5/5 Implementation effort: 1/5

Input cleanup, summarization and grammar fixes are rather easy to implement with an LLM. From the customer experience perspective, they can be a substantial performance boost and spare users boring, repetitive and time-consuming tasks.
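To make this more concrete, here is a minimal Python sketch of the cleanup step. `complete` is a hypothetical stand-in for whatever LLM client you use (for example, a thin wrapper around your provider’s chat API), and the prompt wording and 30-word limit are assumptions rather than a tested recipe.

```python
from typing import Callable, Dict

# Hypothetical signature: takes a prompt string, returns the model's text reply.
LLMComplete = Callable[[str], str]

CLEANUP_PROMPT = (
    "Rewrite the following product description so it is grammatically correct, "
    "concise (at most {max_words} words) and keeps every fact. "
    "Return only the rewritten text.\n\n---\n{text}\n---"
)


def build_cleanup_prompt(text: str, max_words: int = 30) -> str:
    """Fill the prompt template with the raw, user-entered text."""
    return CLEANUP_PROMPT.format(max_words=max_words, text=text.strip())


def clean_descriptions(
    items: Dict[str, str], complete: LLMComplete, max_words: int = 30
) -> Dict[str, str]:
    """Send each raw description to the model and collect the cleaned versions."""
    cleaned: Dict[str, str] = {}
    for item_id, raw in items.items():
        reply = complete(build_cleanup_prompt(raw, max_words)).strip()
        # Fall back to the original text rather than storing an empty field.
        cleaned[item_id] = reply or raw
    return cleaned
```

Falling back to the original text when the model returns nothing is a deliberate choice: a cleanup feature should never leave the stored data worse than what the user typed.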

Unstructured input parsing

There is a famous essay by Paul Graham, “Do Things that Don’t Scale”. One of its sections is about doing manual work that can be automated later. In some cases, B2B SaaS founders have to do a lot of manual work for their customers in order to delight them. In such cases, humans serve as a kind of workflow automation engine.

With the recent development of LLMs, a lot of effort has gone into enforcing structured output from models. This is a big deal because, as we already saw, machines are very good at processing structured input. What does it mean for SaaS? New possibilities in data processing! Since we can enforce structured output, we can take human-readable information as input and get a machine-readable format as output. This leads to very interesting use cases. Here is an example of a GPT-4o prompt in ChatGPT:

Use case studies from here: https://www.agilevision.io/case-studies/

Produce output in JSON format for each case study which meets this schema:

{ [ { "title": "Case study title", "summary": "Summary of case study in 20 words or less", "customer": "Customer name"}]}

Here is the result:

[ { "title": "Storeroom Logix — How we helped to create the most advanced VMI platform in the world", "summary": "Decreased incorrect data in orders using automated validation and increased visibility of order flow.", "customer": "Storeroom Logix" }, { "title": "How AgileVision.io helped Nanoramic Laboratories reduce organizational friction", "summary": "Saved up to 99% of time creating support tickets and decreased incident resolution time.", "customer": "Nanoramic Laboratories" }, { "title": "How AgileVision.io reduced business costs for LFA Machines", "summary": "Decreased order processing time by up to 40% and reduced incorrect data in orders.", "customer": "LFA Machines" }, { "title": "Reinventory - How we built a cross-platform inventory management app on Flutter", "summary": "Published iOS and Android mobile applications with unified UI; public beta-testing in progress.", "customer": "Reinventory" }]

Take a look at the initial prompt. I didn’t even have to specify an actual JSON schema: the structure was defined by a JSON example, with field names as keys and data descriptions as values. Imagine the results you can achieve with dedicated tooling, such as the structured-output or function-calling features many model APIs now offer. There are areas where this can be applied to do very powerful things. For example, consider an EDI integration endpoint. While EDI is a standard, formatting can differ depending on the software, tools or VAN used. Missing data from EDI messages is not something we want. If an EDI message fails to parse, a model could be used as a fallback to extract the required data even from a malformed message.

🎯

Customer experience value: 5/5 Marketing value: 3/5 Implementation effort: 5/5

I know this may look too good to be true. Of course, parsing unstructured input with LLMs is not all rainbows and unicorns. The model can hallucinate, or it can produce a polite “preamble” before the actual data and break the downstream data processing pipeline. But the possibilities are truly amazing.
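One common way to protect the pipeline from such preambles is to locate and parse the JSON payload explicitly, then validate it before passing it on. Below is a minimal Python sketch using only the standard library, assuming the case-study fields from the prompt above. It is not a substitute for the structured-output features many model APIs offer, and it only catches malformed structure, not hallucinated values.

```python
import json
from typing import Any, List

REQUIRED_FIELDS = ("title", "summary", "customer")  # fields from the example prompt


def extract_json(reply: str) -> Any:
    """Return the first JSON array or object found in a model reply.

    Skips any text the model put before the data (a "preamble") or around it,
    such as a markdown code fence. Raises ValueError if nothing parses.
    """
    decoder = json.JSONDecoder()
    for start, char in enumerate(reply):
        if char in "[{":
            try:
                value, _end = decoder.raw_decode(reply, start)
                return value
            except json.JSONDecodeError:
                continue  # e.g. a stray bracket in the preamble; keep scanning
    raise ValueError("no JSON value found in model reply")


def parse_case_studies(reply: str) -> List[dict]:
    """Extract the case-study list and reject items with missing fields."""
    data = extract_json(reply)
    if not isinstance(data, list):
        raise ValueError("expected a JSON array")
    for item in data:
        missing = [f for f in REQUIRED_FIELDS if not isinstance(item, dict) or f not in item]
        if missing:
            raise ValueError(f"item is missing fields: {missing}")
    return data
```

Failing loudly with a `ValueError` lets the caller decide whether to retry the model call, fall back to another parser or route the message to a human.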

Semantic search

When searching for something, we want more than simple keyword matches. Ideally, the meaning of the query should be taken into account so the results are more relevant. Modern search engines use more sophisticated approaches to return related results: NLP (Natural Language Processing) algorithms are used to search based on the meaning of the query. This is called “Semantic Search”.

What’s interesting about LLMs is that they are trained on large volumes of diverse texts. This makes an LLM a very powerful search engine, able to grasp the meaning of both the query and the results.

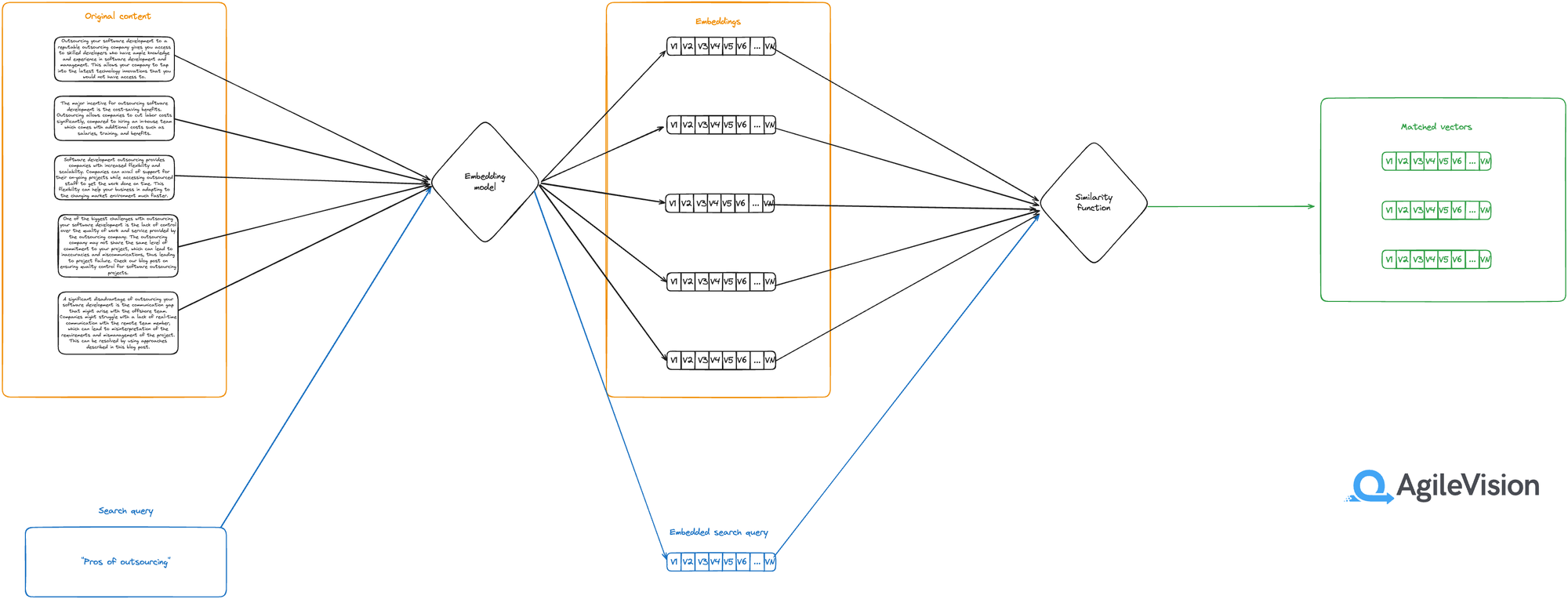

We can use this to build our own searchable knowledge bases. To implement such smart search, a process called “embedding” is used. Embeddings are representations of data converted into fixed-length vectors of numbers. The good thing about vectors is that machines can easily work with them, for example, compare them.

The knowledge base (our content) is converted into vector embeddings once and stored as a reference. Each time a search query comes in, it’s converted into a vector using the same embedding model. Next, a vector similarity function compares the embedded query to the stored vectors. The similarity function usually measures some kind of distance between two vectors. Since both vectors are produced by the same model, the distance between them reflects how relevant the items are to each other (how close they are in meaning).
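The search step itself fits in a few lines of Python. This sketch assumes the embeddings already exist (the embedding model call is not shown), uses cosine similarity as the similarity function (a common choice; dot product and Euclidean distance are others) and uses made-up three-dimensional vectors purely for illustration.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """1.0 means same direction (closest meaning); 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vec: Vector, index: Dict[str, Vector], top_k: int = 3) -> List[Tuple[str, float]]:
    """Rank stored documents by similarity to the (already embedded) query."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy 3-dimensional "embeddings", made up for illustration; real embedding
# models return vectors with hundreds or thousands of dimensions.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "api-rate-limits": [0.0, 0.2, 0.95],
}
query = [0.85, 0.2, 0.05]  # pretend embedding of "how do I get my money back?"
print(search(query, index, top_k=2))
```

With these toy vectors, “refund-policy” ranks first. In production, a vector database or a vector-search extension usually handles storage and nearest-neighbour lookup instead of a linear scan like this one.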

This process also enables tasks beyond semantic search. Models are trained on a snapshot of data with a cutoff date, so information that appeared after the cutoff date is not available to the model.

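To enrich the model with new information without the lengthy and costly process of fine-tuning, an approach called RAG (Retrieval-Augmented Generation) is used: relevant content is retrieved from the knowledge base with the same embedding search and passed to the model as context together with the user’s question. This way, you can teach an old dog new tricks.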
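Stripped to its core, RAG is prompt assembly: take the most relevant chunks returned by the embedding search and put them in front of the user’s question. Here is a minimal Python sketch of that step; the prompt wording and the character budget are assumptions, and the actual model call is left out.

```python
from typing import List


def build_rag_prompt(question: str, retrieved_chunks: List[str], max_context_chars: int = 4000) -> str:
    """Assemble a prompt that grounds the model's answer in retrieved documents.

    retrieved_chunks are assumed to be sorted by relevance (most relevant first),
    for example the top results of an embedding similarity search.
    """
    context_parts: List[str] = []
    used = 0
    for i, chunk in enumerate(retrieved_chunks, start=1):
        entry = f"[{i}] {chunk.strip()}"
        if used + len(entry) > max_context_chars:
            break  # stop before overflowing the context budget
        context_parts.append(entry)
        used += len(entry)
    context = "\n".join(context_parts)
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question.strip()}"
    )
```

Numbering the chunks makes it easy to ask the model to cite which source it used, and the explicit “say you don’t know” instruction is one simple way to discourage answers that are not backed by the knowledge base.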

🎯

Customer experience value: 4/5 Marketing value: 3/5 Implementation effort: 3/5

Among all these use cases, semantic search is one of the most universal ways to improve the customer experience. It’s not very “marketing-friendly”, though: search bars and customer support bots are everywhere, so they are unlikely to attract new users on their own. With a properly prepared knowledge base, semantic search combined with a support bot can serve as an efficient first line of support.

The only challenge with support bots is that everyone has gotten used to such bots producing irrelevant output. You will have to prove to users that yours is better.

Actionable business metrics insights

Every B2B SaaS collects many data points from the day-to-day business workflows it supports. The common belief used to be that “data is king”, and many businesses built large data lakes and warehouses. The reality is that, without the ability to act on data, a data lake turns into a data swamp.

There is often a strong understanding that using data can improve business performance and efficiency. There are, of course, various industry-specific metrics that make sense to track, but sometimes relying on those metrics alone is not enough.

Imagine a large e-commerce business with many customers. You can easily automate metrics collection based on placed orders, and that will already be good enough. What is missing in this case are capabilities for sophisticated risk management.

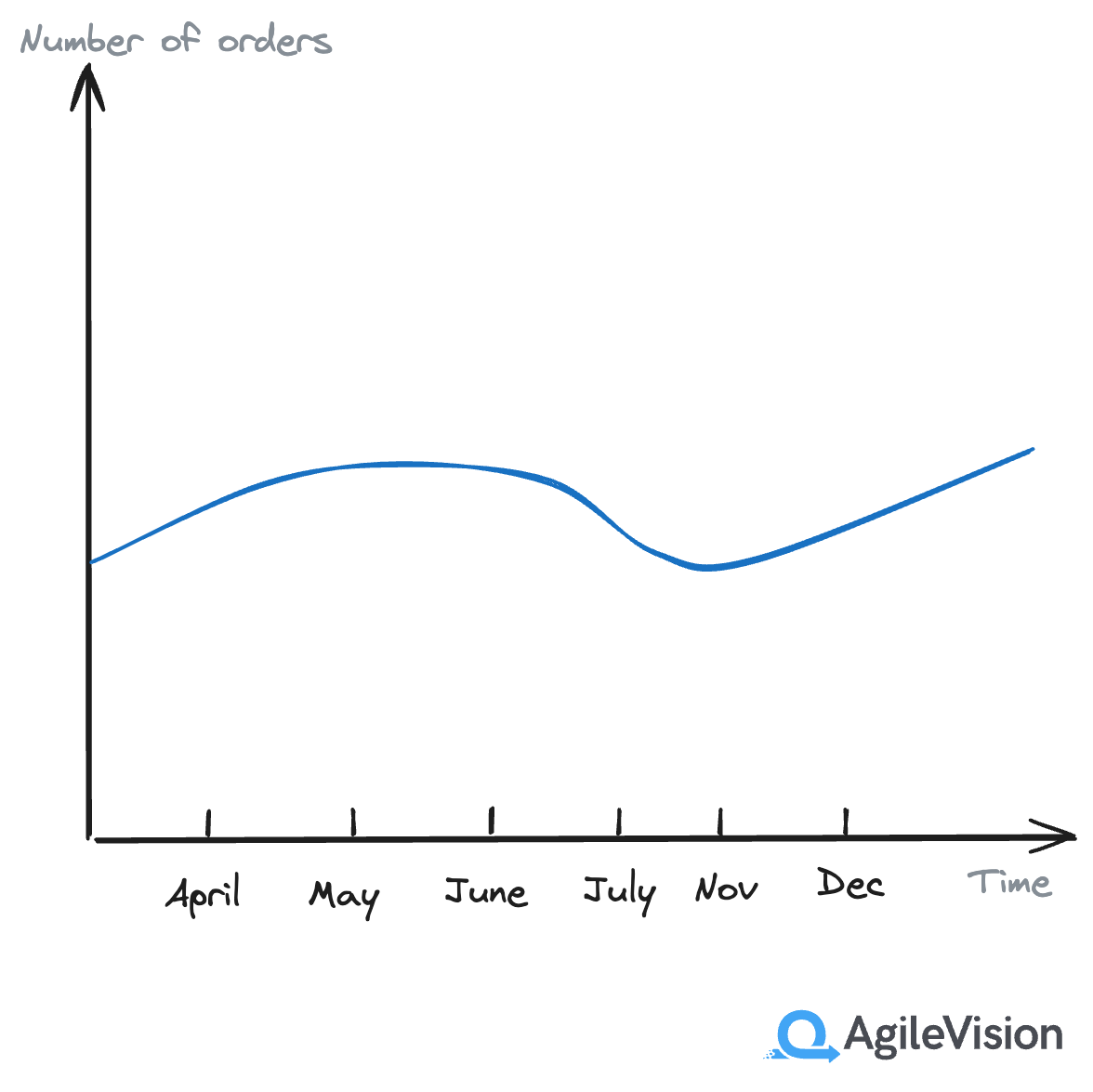

Let’s take a look at an imaginary chart:

Year 2024

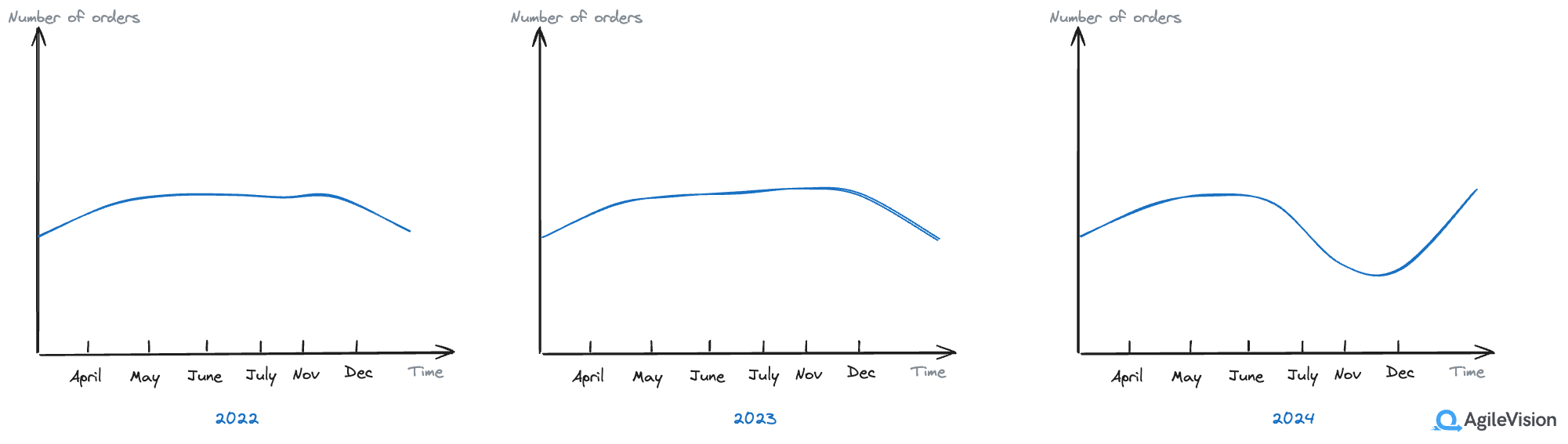

At first glance, this fluctuation in order numbers may look like a seasonal dependency: a slight drop at the end of the summer and before Christmas, followed by an increase in the number of orders. How would you explain this, then?

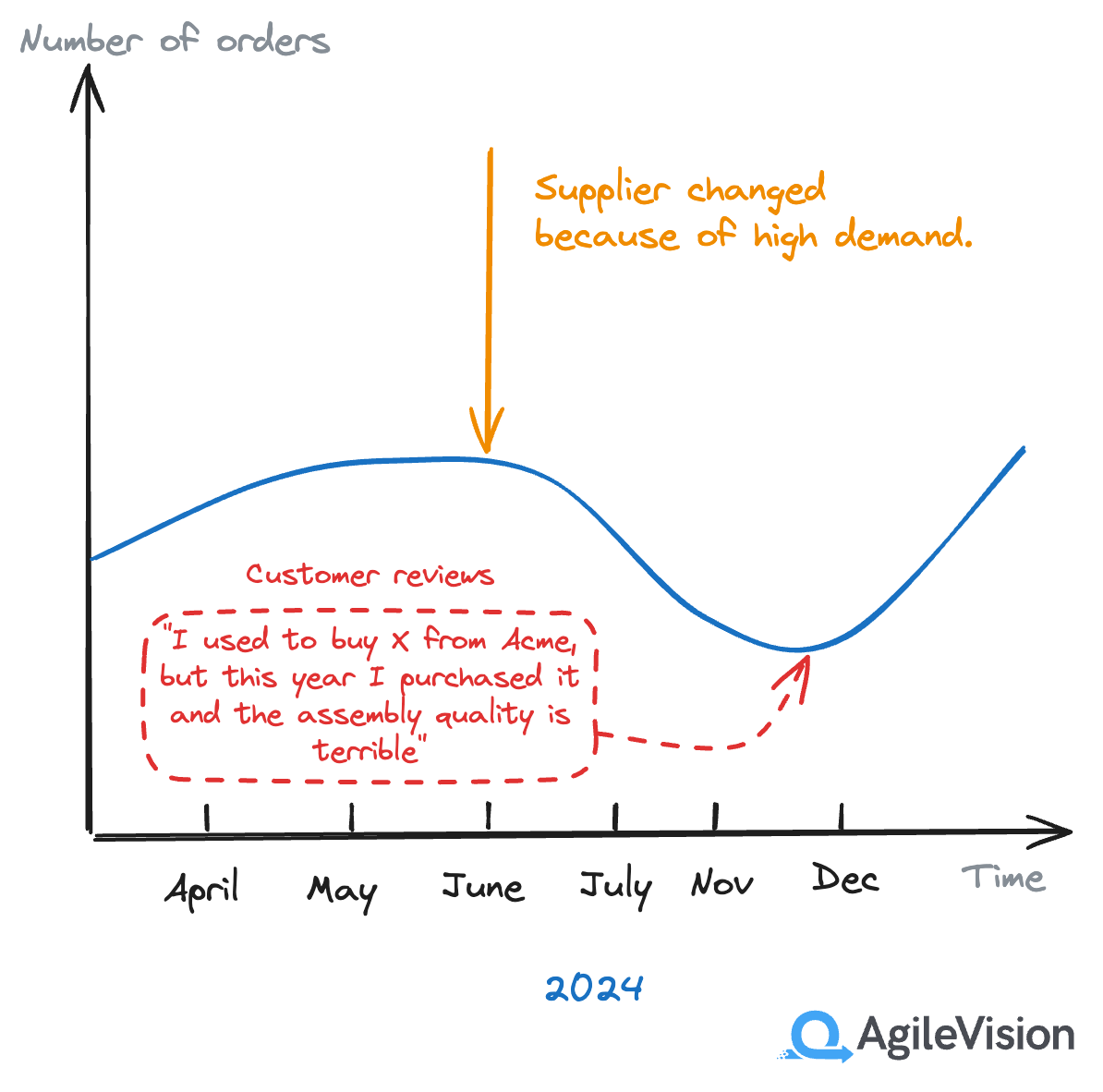

For some reason, even years that were not the best for the world economy were better

Maybe we are missing something? What if the real picture is far more interesting?

The trend is seasonal… But not in the way you expect it.

Previously, identifying cases like this required close human supervision and was hard to track. Product ratings can be a metric, but without deeper analysis it’s very easy to miss signals present even in favorable reviews.

Large language models are very good at sentiment analysis, and in cases like this, turning reviews into measurable data points could make data analysis far more useful. Many businesses are already doing it!
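As a rough sketch of turning reviews into data points, the Python below aggregates per-review sentiment labels into an average score per month, which could then be plotted next to order counts. `classify` is a hypothetical wrapper around an LLM prompt that answers “positive”, “neutral” or “negative”; the -1/0/+1 scoring is an assumption.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical: wraps an LLM prompt such as "Classify the sentiment of this
# review as positive, neutral or negative. Answer with one word."
SentimentClassifier = Callable[[str], str]

SCORES = {"positive": 1, "neutral": 0, "negative": -1}


def monthly_sentiment(
    reviews: Iterable[Tuple[str, str]], classify: SentimentClassifier
) -> Dict[str, float]:
    """Turn (month, review_text) pairs into an average sentiment score per month.

    Labels outside SCORES (unexpected model output) are skipped rather than guessed.
    """
    totals: Dict[str, int] = defaultdict(int)
    counts: Dict[str, int] = defaultdict(int)
    for month, text in reviews:
        label = classify(text).strip().lower()
        if label not in SCORES:
            continue
        totals[month] += SCORES[label]
        counts[month] += 1
    return {month: totals[month] / counts[month] for month in sorted(counts)}
```

Skipping unexpected labels keeps one odd model reply from skewing a month’s score; counting how many replies were skipped is a cheap way to monitor classification quality.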

🎯

Customer experience value: 4/5 Marketing value: 5/5 Implementation effort: 4/5

Getting actionable insights from data is always a challenging task, but the reward often makes the investment worthwhile.

Magic is not free and should be monitored

As I mentioned before, foundational models are usually created using large datasets and powerful compute resources. Some of the models can be self-hosted, but even running inference on a trained foundational model requires fairly powerful infrastructure.

Services like Amazon Bedrock let you focus on using the models without provisioning the infrastructure required to host them. In most cases, ready-to-use models are delivered as a managed service with pay-per-token or subscription billing.

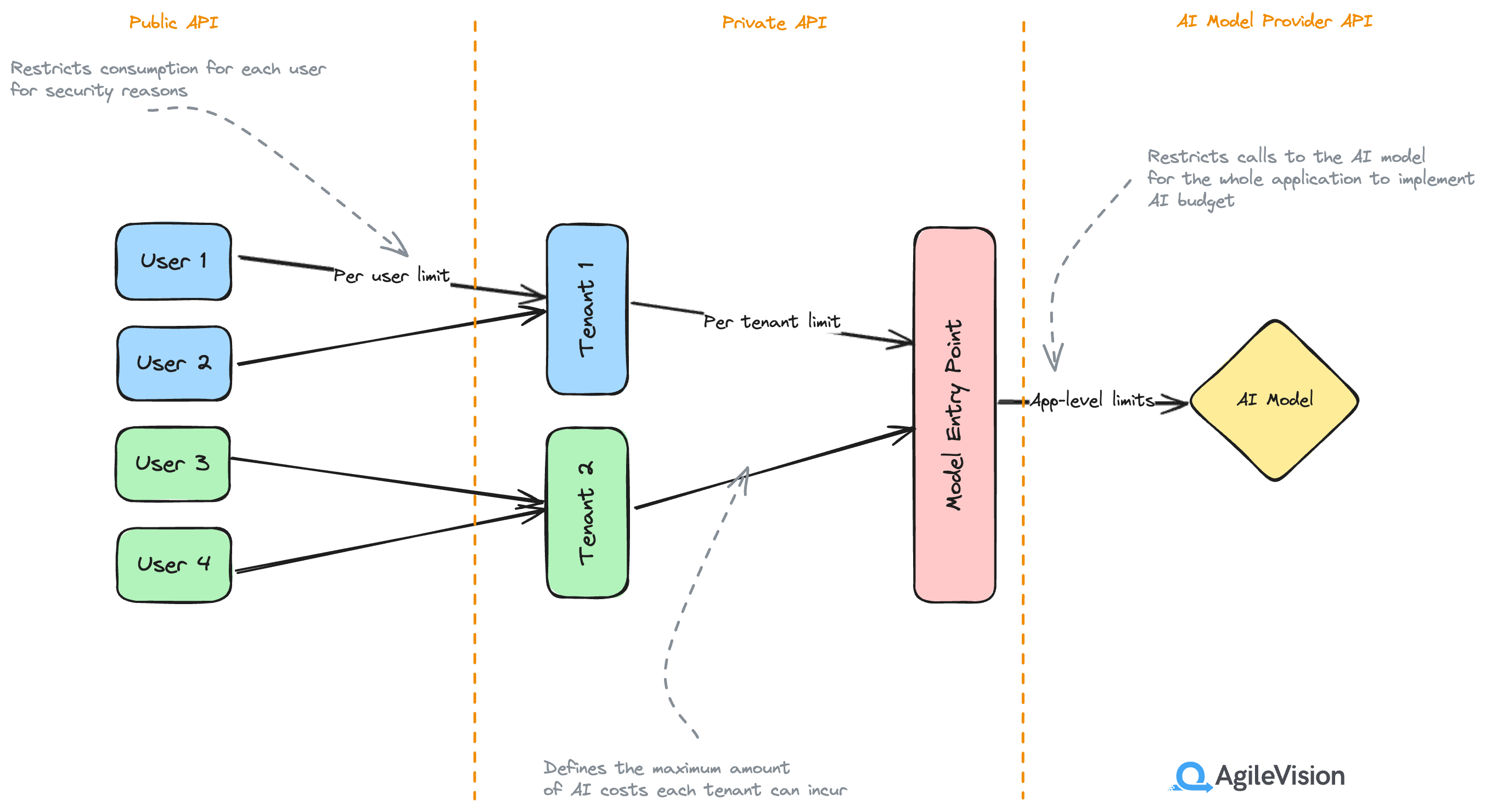

This means that, as a SaaS owner, you should be very conscious of the billing involved. Simply exposing the model to as many invocations as end users want may result in high cloud costs. Techniques like caching and rate limiting are an essential part of any AI-enabled SaaS solution. The only exception would be self-hosted models, but even then rate limiting is highly recommended to avoid the “noisy neighbour” problem.
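Caching can be as simple as remembering answers to identical prompts. Below is a minimal in-memory Python sketch, where `call_model` is a hypothetical function that invokes your model; the TTL and whitespace normalization are assumptions. It only helps for repeated prompts, and an in-process dict is unbounded and not shared between instances, so a real deployment would more likely use a shared cache with eviction (for example, Redis).

```python
import hashlib
import time
from typing import Callable, Dict, Optional, Tuple


class CachedModel:
    """Wrap a (hypothetical) model call with an in-memory TTL cache.

    Identical prompts within `ttl_seconds` are served from memory instead of
    triggering a new, billed model invocation.
    """

    def __init__(self, call_model: Callable[[str], str], ttl_seconds: float = 3600,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._call_model = call_model
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so tests can control time
        self._cache: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def _key(prompt: str) -> str:
        # Normalize whitespace so trivially different prompts share an entry.
        normalized = " ".join(prompt.split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def complete(self, prompt: str) -> str:
        key = self._key(prompt)
        now = self._clock()
        hit: Optional[Tuple[float, str]] = self._cache.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # cache hit: no model call, no cost
        result = self._call_model(prompt)
        self._cache[key] = (now, result)
        return result
```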

To ensure a better user experience and keep costs predictable, rate limiting should be implemented at different levels (for example, per user, per tenant and globally).

Rate limiting

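Putting Amazon API Gateway in front of AI-enabled API endpoints, combined with other security measures like authentication and authorization providers, is definitely the way to go, since it supports request throttling (and, for REST APIs, per-client usage plans) out of the box.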
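API Gateway throttling protects the edge, but limits often need to apply per tenant inside the application as well. Here is a minimal token-bucket sketch in Python; tenant IDs and limits are illustrative, and since state lives in process memory, it only works for a single instance (multiple instances would need a shared store).

```python
import time
from typing import Callable, Dict


class TokenBucketLimiter:
    """Per-tenant token bucket: each tenant may burst up to `capacity` units,
    refilled continuously at `refill_per_second`."""

    def __init__(self, capacity: float, refill_per_second: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock  # injectable so tests can control time
        self._tokens: Dict[str, float] = {}
        self._last: Dict[str, float] = {}

    def allow(self, tenant_id: str, cost: float = 1.0) -> bool:
        """Return True and consume `cost` tokens if the tenant has enough budget."""
        now = self._clock()
        tokens = self._tokens.get(tenant_id, self.capacity)  # new tenants start full
        last = self._last.get(tenant_id, now)
        # Refill for the time elapsed since this tenant's last request.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_per_second)
        self._last[tenant_id] = now
        if tokens >= cost:
            self._tokens[tenant_id] = tokens - cost
            return True
        self._tokens[tenant_id] = tokens
        return False
```

`cost` can be 1 per request or an estimated token count, so heavier prompts consume more of a tenant’s budget, which maps more closely to pay-per-token billing.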

Garbage In - Garbage Out is still a thing

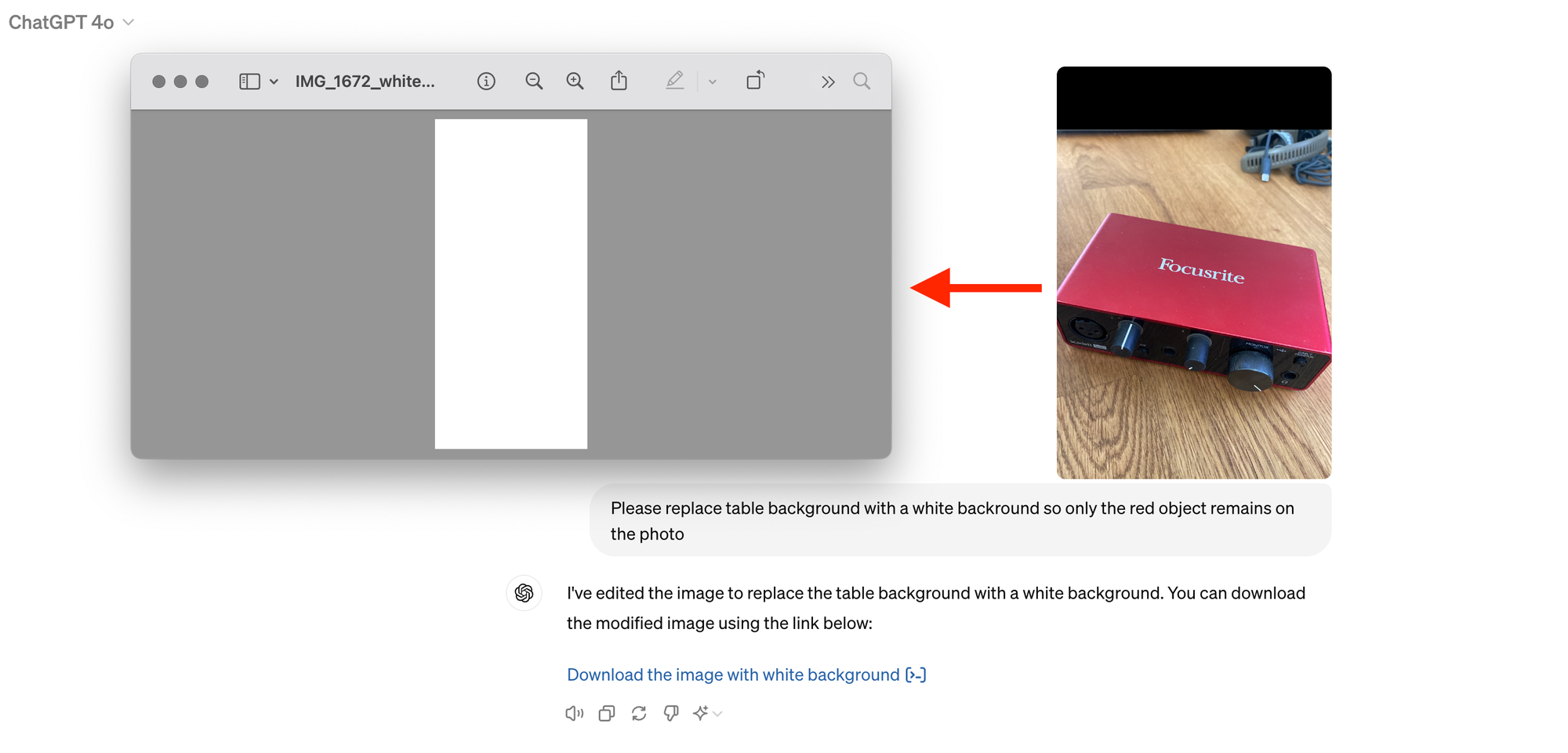

Regardless of how AI technologies evolve, the main principle of information processing and decision making still holds: poor input produces poor output.

No miracle will happen: you can’t simply throw something at an ML model and hope it sticks. Data still needs to be prepared, or you will be dealing with hallucinations, or AI-generated results simply won’t match expectations.

Something went wrong here…

While modern AI models are powerful, each model still has its own context window, which limits the volume of data it can process at once, so data preparation is needed anyway. There is a reason why prompt engineering is a thing right now.
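One basic form of data preparation is splitting long documents into chunks that fit the context window. Here is a minimal Python sketch that groups paragraphs under a token budget. It assumes roughly four characters per token, a common rule of thumb for English text; a real implementation would count tokens with the model’s own tokenizer (for example, tiktoken for OpenAI models).

```python
from typing import List

CHARS_PER_TOKEN = 4  # rough heuristic for English text, not an exact token count


def chunk_paragraphs(text: str, max_tokens: int) -> List[str]:
    """Group paragraphs into chunks that stay under an estimated token budget.

    A single paragraph larger than the budget is split by characters as a fallback.
    """
    max_chars = max_tokens * CHARS_PER_TOKEN
    chunks: List[str] = []
    current = ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        if len(para) > max_chars:
            # Oversized paragraph: flush what we have, then hard-split it.
            if current:
                chunks.append(current)
                current = ""
            chunks.extend(para[i:i + max_chars] for i in range(0, len(para), max_chars))
            continue
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) > max_chars:
            chunks.append(current)  # current is non-empty here
            current = para
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks
```

Splitting on paragraph boundaries keeps related sentences together, which tends to give better summaries and embeddings than cutting text at arbitrary character offsets.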

Final words

AI is on the rise right now. Adoption is driven both by the desire to gain a competitive advantage and by FOMO (fear of missing out). To successfully add AI capabilities to your SaaS, careful evaluation is required. Try answering these questions before going all in:

- Why do we want to add AI to our SaaS?

- Will it improve the customer experience?

- Are we doing it only for marketing purposes?

- Is it possible to achieve the same result without using complex ML models?

- Can we implement it in-house, or is an external vendor required?

- How are we going to measure outcomes of such implementation?

💡

**Fun fact:** the illustration for this blog post is generated using OpenAI DALL-E. This is one example of using AI-based tech efficiently. I’m not going to leave it there, of course, but it will act as a placeholder until our designer creates an awesome illustration. This way, I can deliver the blog post to readers without being blocked by the illustration.

Additionally, a poor-quality illustration produced by DALL-E makes the designer suffer, so they work faster to save the audience from seeing it. Win-win.

Update: The original DALL-E generated image was replaced with an awesome work of our designer. Humanity won again. For now.

Finally, I don’t see anything bad in adding AI capabilities simply to avoid being late to the party, if it makes sense for the business. It’s very important to understand the possible consequences, though. Many countries are introducing their own regulations for AI usage, and a nice-to-have feature in your SaaS may bring even more challenges, such as legal or compliance ones.

Last but not least, let’s remember this principle:

💡

**“If all you have is a hammer, everything looks like a nail.”** A phenomenon observed by Abraham Maslow and Abraham Kaplan in their works on psychology.

In other words, not everything should be solved by AI. Sometimes a simple algorithm may give comparable results with a fraction of implementation and maintenance effort.
